Finalization of one of our prototypes for an immersive and collaborative virtual theater experience

Immersive and spatial computing offer new possibilities to experience real stage events from home. We present a technical concept for experiencing theater performances using virtual reality headsets. For this purpose, we fuse a virtual theater model with real stage content of a theater performance.

An Immersive and Collaborative Virtual Theater Experience

The goal of this research is to design and implement a virtual reality (VR) application, including a recording and processing pipeline, that lets users experience real stage events immersively, with theater performances as the example.

To achieve both a strong sense of presence and a low-threshold technical implementation, we propose a hybrid format that fuses virtual content with video recordings from the stage. Research in other contexts has already shown good presence ratings for computer-generated 3D content fused with video recordings [9]. Our approach transfers and extends this concept to real stage events and embeds it in an overall social experience. The approach is presented below using the Chemnitz Opera House as an example.

Insights into our virtual theater experience

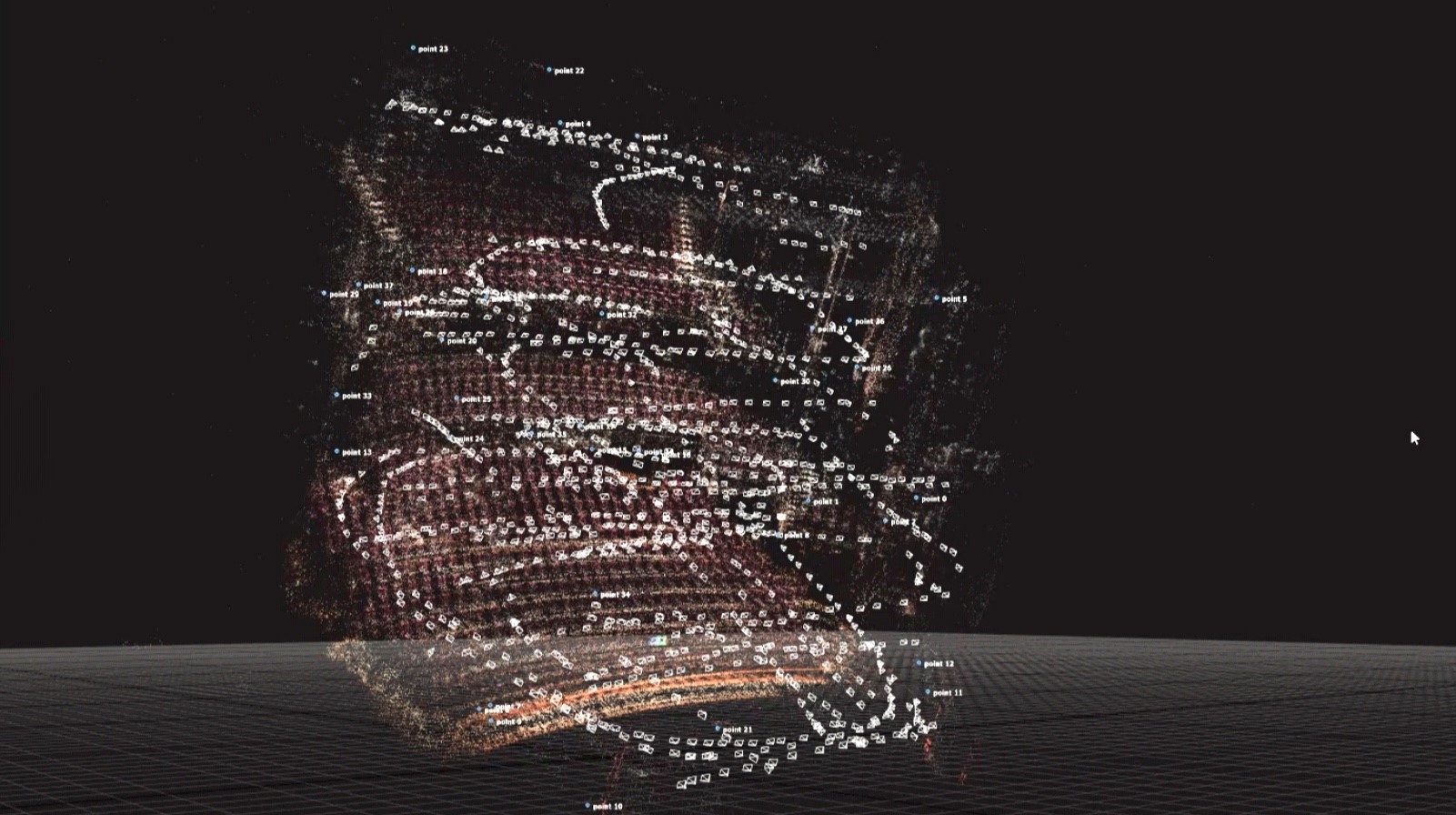

3D point cloud of the Chemnitz Opera House (shading in the background) based on photogrammetry and the final virtual model

Virtual auditorium

To maximize immersion and to enable multiplayer functions, the entire theater space was rebuilt in 3D. Photogrammetry was used for this purpose, and the result was then post-processed manually to improve immersion and to obtain an optimized data set. It was important to preserve the exact geometry of the stage itself and of the viewing axis from the later viewing position. The entire scene was integrated into a Unity project. For performance reasons, the audience seats on which the avatars are later seated were modeled separately as a single 3D model, which a script then duplicates and positions throughout the room. A lighting concept allows the lighting conditions in the room to be adjusted depending on context. The stage space was specially adapted to optimize the integration of the video recording.
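The scripted seat placement can be illustrated with a minimal sketch. It is written in Python for readability rather than as a Unity script, and it is not the project's actual code: the function name `layout_seats`, the straight-row layout, and all dimensions (seat pitch, row pitch, row rise) are assumptions chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Seat:
    row: int
    number: int
    x: float  # metres, left/right of the viewing axis (x = 0)
    y: float  # metres, height above the first row
    z: float  # metres, distance from the stage edge


def layout_seats(rows, seats_per_row, seat_pitch=0.55, row_pitch=0.90,
                 row_rise=0.12, first_row_z=3.0):
    """Return one Seat per position; all default dimensions are hypothetical."""
    seats = []
    # Offset of the first seat so that each row is centred on the viewing axis.
    x0 = -(seats_per_row - 1) * seat_pitch / 2
    for r in range(rows):
        for s in range(seats_per_row):
            seats.append(Seat(
                row=r,
                number=s,
                x=x0 + s * seat_pitch,
                y=r * row_rise,                # each row sits slightly higher
                z=first_row_z + r * row_pitch  # and further from the stage
            ))
    return seats


seats = layout_seats(rows=3, seats_per_row=4)
```

In an engine, each returned position would be used to place one copy of the single seat model, so only one seat has to be modeled and optimized by hand.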

Integrated video recordings

The stage content of the event to be broadcast is recorded with camera systems on site and integrated into the virtual stage space (see figure 2a). Since simple video recordings usually contain no depth information, integrating them into a virtual environment with six degrees of freedom (6-DoF) can break immersion. There is a risk that the content looks like a cinema screen from the viewer's perspective and is therefore not perceived as part of the space. To create immersive experiences with video recordings as well, two approaches are used. The simpler approach records stereoscopic video with two slightly offset cameras placed at the later viewing position in the virtual auditorium (see figure 2b and c). This produces a stereoscopic effect but no motion parallax: the representation of the scene stays the same even when the viewer moves. The missing parallax is less noticeable, however, when the distance between the content and the viewer is large and the viewer's movements are small. The advantages of this approach are the good availability of camera setups and the simpler transmission process, which can also be implemented well in real time. This setup only requires on-site calibration and careful integration into the VR scene. For example, shaders provide an unobtrusive transition between the video edge and the stage space.
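Why the missing parallax matters less at larger distances can be made concrete with a small geometric sketch. It estimates the relative angular shift between a near and a far object that a sideways head movement would produce in a true 3D scene, which is exactly the shift a stereoscopic video cannot show. The function name and the example distances are hypothetical and not measurements from the project.

```python
import math


def missing_parallax_deg(head_shift_m, near_m, far_m):
    """Relative angular shift (in degrees) between a near and a far object
    caused by a sideways head movement of head_shift_m metres."""
    near_angle = math.atan2(head_shift_m, near_m)
    far_angle = math.atan2(head_shift_m, far_m)
    return math.degrees(near_angle - far_angle)


# Hypothetical case: a performer 2 m in front of a backdrop, 10 cm head movement.
close_seat = missing_parallax_deg(0.10, near_m=5.0, far_m=7.0)
distant_seat = missing_parallax_deg(0.10, near_m=20.0, far_m=22.0)
```

With these assumed values, the shift expected from the distant seat is considerably smaller than from the close seat, which is consistent with the observation that the lack of parallax is harder to notice from further back.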

a) separation of the areas of the virtual space and the video of the real stage recording, b) stereoscopic camera setup, c) stereoscopic integration, d) camera array for light field recording, e) light field from a "wrong" perspective shows clear artifacts, but also the depth of the objects for parallax effect, f) light field from the perspective of the camera position shows correct representation

The second approach uses an adapted light field technology. The scene is recorded from several slightly offset perspectives and then processed computationally. Ideally, a large number of camera positions would be used so that all light rays emanating from the scene are captured, which would make it possible to generate a correct image for any viewer position. This is very complex, however, and does not meet the requirements described above. Since the viewer usually consumes the content while seated, a correct image from every perspective is not necessary. We therefore propose a small camera array that records slightly offset perspectives from a specific seating position (see figure 2d). The differences between the images caused by the parallax effect are used to determine depth information, which is then used to transform the video content into a 3D model. By matching the size of the camera array to the desired movement radius of the viewer, good results can be achieved even with small arrays (see figure 2e and f). The disadvantages of this approach are the higher processing effort and the significantly larger data volumes compared to a stereo recording. This poses major challenges, especially for real-time transmission at live events. In addition, the conversion of real recordings into 3D models is not free of errors, and the resulting artifacts can have a negative impact on the experience.
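The text does not specify how depth is computed from the image differences. As a hedged illustration, the sketch below uses the standard relation for two rectified pinhole cameras, depth = focal length × baseline / disparity, and then back-projects a pixel into a 3D point. The function names, the camera parameters, and the assumption of a rectified camera pair are illustrative and may differ from the pipeline actually used.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres for a pixel disparity between two rectified cameras.

    focal_px is the focal length in pixels, baseline_m the camera spacing.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def back_project(u, v, depth_m, focal_px, cx, cy):
    """Turn pixel (u, v) with known depth into a 3D point in camera space.

    (cx, cy) is the principal point, assumed here to be the image centre.
    """
    x = (u - cx) * depth_m / focal_px
    y = (v - cy) * depth_m / focal_px
    return (x, y, depth_m)


# Hypothetical values: 1000 px focal length, cameras 10 cm apart.
near_depth = depth_from_disparity(50, focal_px=1000, baseline_m=0.1)
far_depth = depth_from_disparity(5, focal_px=1000, baseline_m=0.1)
centre_point = back_project(960, 540, near_depth, 1000, cx=960, cy=540)
```

Because depth is inversely proportional to disparity, small matching errors on distant objects translate into large depth errors, which is one plausible source of the artifacts mentioned above.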

View of the multiplayer environment and the avatars for direct social interaction

Multiplayer mode

We assume that only experiencing the virtual event together with other people creates clear added value for the broad adoption of such applications. In particular, the audience backdrop, which is missing in the chosen setup, must be replaced. We distinguish between a direct and an indirect collaboration scenario. In the direct scenario, people who know each other meet in virtual space to experience the event together and exchange ideas. The indirect scenario concerns the viewers with whom no direct interaction is intended. Previous social VR applications typically let a certain number of participants join an instance and interact with each other without restrictions. This has two disadvantages. First, such groups scale poorly for performance reasons, which would lead to a poor experience, especially at large events. Second, direct exchange with all spectators is not appropriate to the context; on the contrary, it can introduce disruptions that considerably worsen the experience.

For these reasons, the present implementation separates the intended interaction partners from the rest of the audience. Multi-user functions for the direct transmission of motion data and audio were integrated on the basis of the Photon multiplayer framework. The ReadyPlayerMe avatar system was used to represent the other persons as well as the user's own avatar. To visualize the rest of the audience, we used various animated avatars in the immediate surroundings. To optimize performance, we used perspective-correct video animations for avatars farther away.
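The three-tier audience representation described above can be sketched as a simple selection rule. This is a minimal illustration and not the project's implementation: the enum, the function name, and the 6 m threshold are hypothetical, and no Photon or ReadyPlayerMe API is used.

```python
from enum import Enum


class Representation(Enum):
    NETWORKED_AVATAR = "avatar with live motion data and audio"
    ANIMATED_AVATAR = "locally animated avatar"
    VIDEO_ANIMATION = "perspective-correct video animation"


def choose_representation(is_interaction_partner, distance_m, near_radius_m=6.0):
    """Pick how one audience member is shown to the local viewer.

    near_radius_m is an assumed threshold, not a value from the project.
    """
    if is_interaction_partner:
        # Intended partners always get the full networked avatar.
        return Representation.NETWORKED_AVATAR
    if distance_m <= near_radius_m:
        # Nearby strangers are shown as animated avatars without networking.
        return Representation.ANIMATED_AVATAR
    # Everyone further away is replaced by a cheaper video animation.
    return Representation.VIDEO_ANIMATION


friend = choose_representation(True, distance_m=1.0)
neighbour = choose_representation(False, distance_m=3.0)
back_row = choose_representation(False, distance_m=25.0)
```

Keeping networked traffic limited to the intended partners means the cost per viewer depends on the size of the friend group rather than on the size of the whole audience.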