Innovative workflow for integrating stereo videos into virtual environments

Compared to traditional stereo movies for cinema or television, integrating stereo content seamlessly into a VR environment requires a more complex compositing approach. We have developed a rigorous method for achieving this integration with high quality. We believe that it opens up new applications for current broadcasting infrastructure.

The challenges of integrating stereo video into virtual environments

To integrate stereo videos into a virtual room, they must be projected onto two virtual projection surfaces. While this sounds simple, it is very difficult to place these screens manually so that they produce a good viewing experience. The reason is that wrong screen placement may cause the perceived depth of the stereo video to conflict with the depth of the 3D model of the event location. For instance, an actor standing in front of a wall may appear to be behind the wall because the perceived depth is too large. This easily leads to VR sickness or eyestrain. This is a new challenge compared to traditional stereo movie production, where the stereo playback only needs to look pleasant and does not have to fit into any virtual environment.
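
The depth conflict can be made concrete with the standard viewing geometry of a flat screen. The sketch below is an illustration, not part of the workflow described here. It assumes that both projection planes lie in one fronto-parallel plane at viewing distance V from the eyes, that the screen parallax P is the horizontal position of a point in the right image minus its position in the left image, and that e is the observer's eye separation. Under these assumptions a point is perceived at distance Z = V·e/(e − P). The function names are hypothetical.

```python
def perceived_depth(parallax: float, eye_distance: float, viewing_distance: float) -> float:
    """Distance from the eyes at which a stereo point is perceived.

    Assumes a flat, fronto-parallel screen at `viewing_distance`; `parallax` is the
    right-image position minus the left-image position on that screen, in the same
    unit as `eye_distance` (e.g. metres).
    """
    if parallax >= eye_distance:
        # The eye rays would be parallel or diverge: no finite perceived depth.
        raise ValueError("parallax must be smaller than the eye distance")
    return viewing_distance * eye_distance / (eye_distance - parallax)


def appears_behind(parallax: float, eye_distance: float, viewing_distance: float,
                   cgi_depth: float) -> bool:
    """True if the stereo point is perceived behind a CGI surface at `cgi_depth`."""
    return perceived_depth(parallax, eye_distance, viewing_distance) > cgi_depth
```

With hypothetical numbers (eyes 6.5 cm apart, screen 5 m away, an actor with 1.3 cm of screen parallax), `perceived_depth(0.013, 0.065, 5.0)` places the actor at about 6.25 m, so `appears_behind(0.013, 0.065, 5.0, 6.0)` reports that the actor seems to stand behind a CGI wall located 6 m away.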

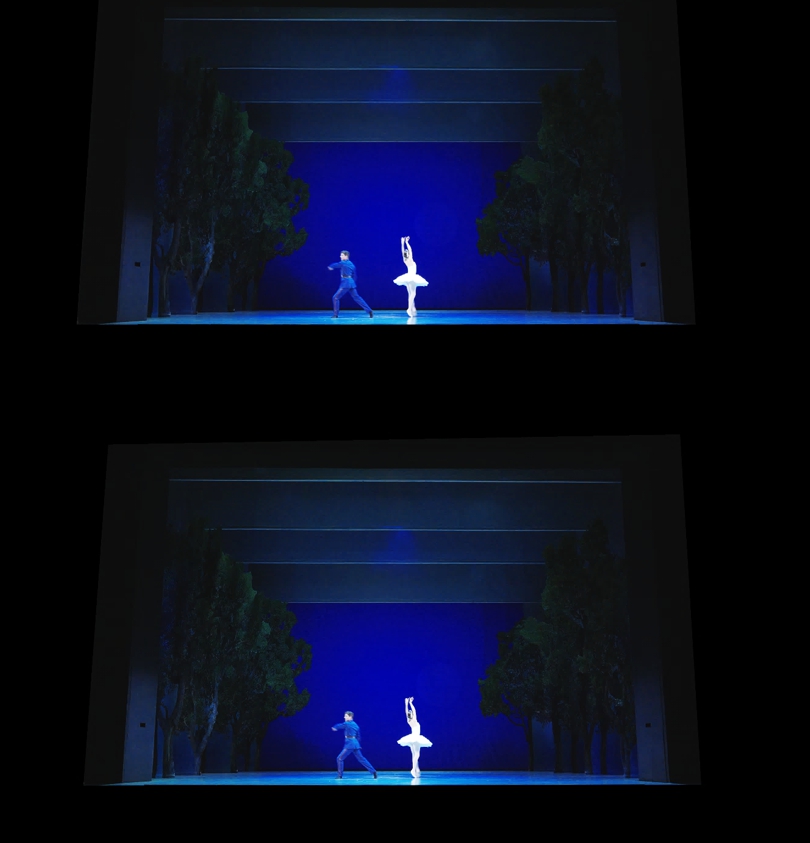

Stereo video of a theater performance integrated into a virtual theater

Theoretically, the described problem could easily be avoided if we had a perfect 3D model of the venue and if all intrinsic and extrinsic parameters of the camera system and the projectors matched perfectly. In practice, none of these conditions can easily be met. First, we typically do not know the intrinsic and extrinsic camera parameters with sufficient precision relative to the coordinate system of the 3D model of the event location. While camera calibration algorithms exist, they only calibrate relative to their own coordinate system, not to the absolute coordinate system of the event location. Even worse, some camera parameters, such as the position of the sensor center relative to the optical axis, are difficult to determine reliably, even though they are crucial for the perceived depth impression. Moreover, practical constraints such as the size of the camera bodies prevent the two stereo cameras from being placed exactly at the human inter-eye distance. In addition, the location of the stereo cameras may deviate from the eye position of the virtual observer. Finally, for artistic reasons it may be desirable to increase the perceived depth impression compared to a perfectly realistic reproduction.
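
As a worked example of why the camera baseline matters, consider an idealized configuration (our assumption, not a description of the actual rig): two parallel cameras with baseline b and focal length f at x = ∓b/2, no sensor offset, and each image shown to one eye on a plane at distance D that is centered in front of that eye and magnified by D/f, so that it covers the camera's field of view. With eye separation e, a scene point (X, Z) yields image coordinates x_L, x_R, plane positions s_L, s_R, screen parallax P, and, by intersecting the two eye rays, the perceived position (X_p, Z_p):

$$
\begin{aligned}
x_L &= \frac{f\,(X + b/2)}{Z}, \qquad x_R = \frac{f\,(X - b/2)}{Z},\\
s_L &= -\frac{e}{2} + \frac{D}{f}\,x_L, \qquad s_R = \frac{e}{2} + \frac{D}{f}\,x_R,\\
P &= s_R - s_L = e - \frac{D\,b}{Z},\\
Z_p &= \frac{D\,e}{e - P} = \frac{e}{b}\,Z, \qquad X_p = \frac{e}{b}\,X .
\end{aligned}
$$

In this configuration the perceived scene is scaled uniformly by e/b (the vertical coordinate scales the same way). It is reproduced at true size only if b = e, and it appears smaller and closer when the camera bodies force b > e.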

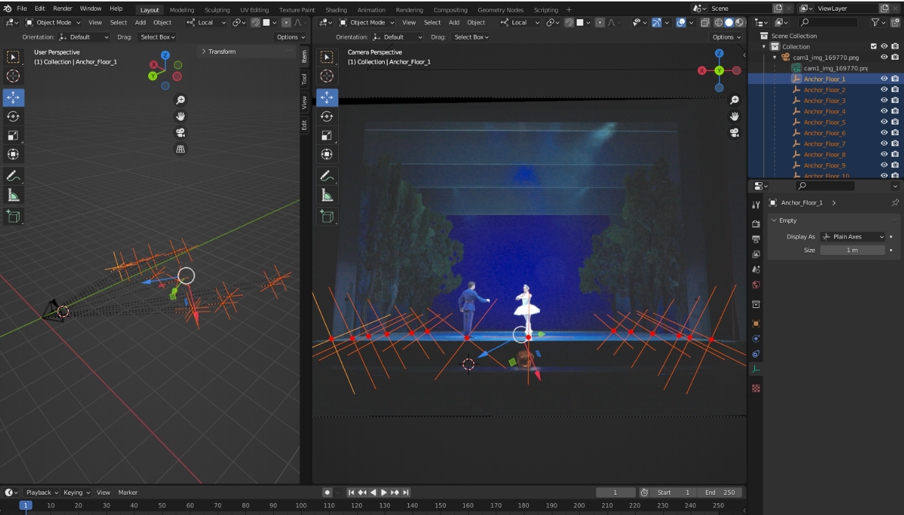

Definition of the reference points in Blender. On the right side, we look through one of the cameras. Red points identify reference points. On the left side, we see the projected points in 3D space.

Referencing the video recordings

We have created a workflow using Matlab and Blender to solve this problem. We first rectify the input videos using image correspondences. This corrects the images such that they correspond to two stereo cameras with perfectly parallel optical axes. This step is important because, even with a careful mechanical design of the stereo rig, manufacturing and assembly tolerances make it hard to achieve such an alignment with single-pixel precision. Next, we import the stereo video into Blender. With the help of a custom plugin, we then mark reference points in both the left and the right image, and each reference point is projected into 3D space. In this way, we simulate where an observer located at the position of the stereo camera would see the reference point in 3D space.
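
For rectified images, projecting a reference point into 3D reduces to standard triangulation from disparity. The sketch below is a generic illustration, not the authors' plugin code. It assumes a pinhole model with the focal length `focal_px` in pixels, the same principal point (`cx`, `cy`) in both rectified images, and the baseline in metres, and it returns coordinates in the left camera's frame (x right, y down, z forward). Placing the point in the Blender scene would additionally require the camera pose. The function name and the vertical-disparity tolerance `max_dy` are hypothetical.

```python
from typing import NamedTuple, Tuple


class Point3D(NamedTuple):
    x: float
    y: float
    z: float


def triangulate_rectified(left_px: Tuple[float, float], right_px: Tuple[float, float],
                          focal_px: float, baseline: float, cx: float, cy: float,
                          max_dy: float = 1.0) -> Point3D:
    """Triangulate one reference point from a rectified stereo pair.

    Coordinates are returned in the left camera frame (x right, y down, z forward),
    in the unit of `baseline`.
    """
    xl, yl = left_px
    xr, yr = right_px
    # After rectification, corresponding points should lie on the same image row.
    if abs(yl - yr) > max_dy:
        raise ValueError(f"vertical disparity {yl - yr:.2f} px: images not rectified?")
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = focal_px * baseline / disparity
    y_img = 0.5 * (yl + yr)  # average the (nearly equal) row coordinates
    return Point3D((xl - cx) * z / focal_px, (y_img - cy) * z / focal_px, z)
```

For example, with a focal length of 1000 px, a 10 cm baseline and the principal point at (960, 540), the correspondence (1060, 580) ↔ (1040, 580) has a disparity of 20 px and triangulates to roughly (0.5, 0.2, 5.0) m.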

Simulate the perceived impression

Our custom Blender plugin allows us to simulate how changing the stereo parameters affects what the virtual observer perceives. In more detail, our Blender workflow can simulate changes of the stereo projector location and orientation, the stereo baseline, the focal length, the position of the sensor center relative to the optical axis, the sensor offset (the difference between the two cameras' sensor center positions along the baseline), the impact of a mismatch between the observer's eyes and the projectors, and the distance between the projection screen and the projectors.
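
A strongly simplified, two-dimensional version of such a simulation (top view, horizontal plane only) is sketched below. It is our own illustration, not the plugin's implementation. It assumes parallel pinhole cameras, two parallel projectors at z = 0 whose projection planes share the plane z = D, a projector focal length `proj_focal` that sets the magnification D / `proj_focal`, and a horizontal image shift `sensor_offset` (h) applied symmetrically to both images. Projector orientation and the principal point are not modeled, and all names are hypothetical. A recorded point (X, Z) is mapped to the intersection of the observer's two eye rays; `None` marks parallel or diverging rays.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class StereoSetup:
    baseline: float              # b: distance between the recording cameras
    focal: float                 # f: camera focal length (same unit as sensor coordinates)
    screen_distance: float       # D: distance from the projectors to the projection plane
    projector_separation: float  # c: distance between the two projectors
    eye_distance: float          # e: observer's inter-eye distance
    sensor_offset: float = 0.0   # h: extra shift between the images; h > 0 raises every parallax
    proj_focal: Optional[float] = None  # projector focal length; defaults to `focal`
    head_x: float = 0.0          # lateral offset of the eye midpoint from the projector midpoint
    head_z: float = 0.0          # forward offset of the eye midpoint from the projectors


def perceive(X: float, Z: float, s: StereoSetup) -> Optional[Tuple[float, float]]:
    """Map a recorded point (X, Z) to the point (X_p, Z_p) the observer perceives."""
    if Z <= 0:
        raise ValueError("scene point must lie in front of the cameras")
    if s.screen_distance == s.head_z:
        raise ValueError("eyes must not lie in the projection plane")
    b, f, h = s.baseline, s.focal, s.sensor_offset
    # Sensor coordinates in the two parallel cameras, shifted apart by h in total.
    x_left = f * (X + b / 2) / Z - h / 2
    x_right = f * (X - b / 2) / Z + h / 2
    # Positions on the common plane z = D, each image centred on its projector.
    proj_f = s.proj_focal if s.proj_focal is not None else f
    magnification = s.screen_distance / proj_f
    s_left = -s.projector_separation / 2 + magnification * x_left
    s_right = s.projector_separation / 2 + magnification * x_right
    parallax = s_right - s_left
    e = s.eye_distance
    if parallax >= e:
        return None  # eye rays parallel or diverging: invalid stereo parameters
    # Both eye rays reach depth head_z + t * (D - head_z) at the same t; they meet here:
    t = e / (e - parallax)
    eye_left_x = s.head_x - e / 2
    x_p = eye_left_x + t * (s_left - eye_left_x)
    z_p = s.head_z + t * (s.screen_distance - s.head_z)
    return x_p, z_p
```

With b = c = e, `proj_focal` equal to `focal`, h = 0 and the head at the origin, `perceive` returns every point unchanged. Doubling the baseline halves all perceived coordinates, and a lateral `head_x` shifts points off the projection plane while points lying exactly on it (t = 1) stay fixed. This is one way to see why head motion causes inconsistencies away from the projection planes.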

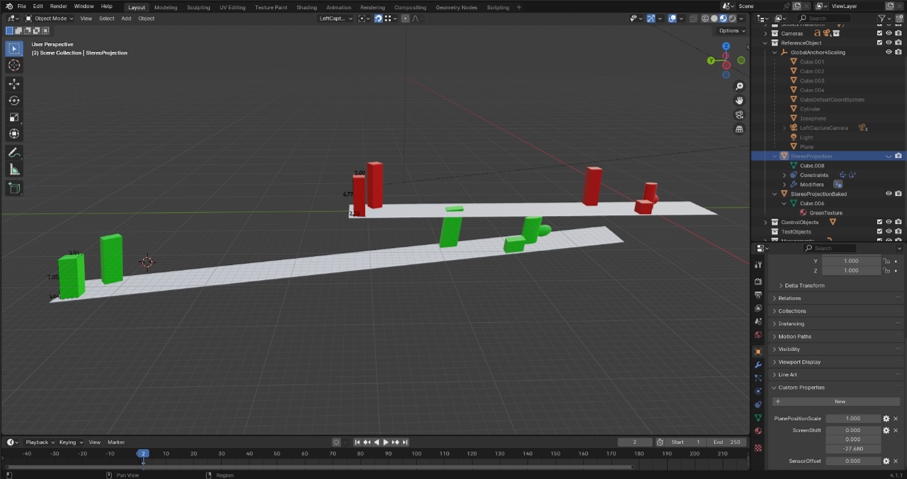

Each of these parameters can be set in Blender, and our plugin transforms the original remodeled theater geometry into a new geometry that corresponds to the observer's perception. The figure illustrates the principle with a synthetic example scene. The original scene consists of a floor plane and a couple of cubes and cylinders (red) that are recorded by a stereo camera. Our Blender plugin then computes a new 3D model (green objects) corresponding to what a user perceives when the stereo parameters are modified as desired. This approach also allows us to detect invalid stereo parameters that cause divergent disparities, i.e., corresponding left and right image points that are farther apart than the observer's eyes. Such a scenario requires the observer to turn the eyes outwards, which is very unnatural and results in eyestrain. The sensor offset and the mismatch between the position of the user's eyes and the recording stereo cameras are critical parameters in this regard.
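
The divergence check can be sketched in closed form under simplifying assumptions of our own: two parallel cameras with baseline b and focal length f, a single flat projection plane, projectors at separation c, a magnification M from sensor to plane, and a horizontal image shift h. A point at depth Z then has the screen parallax P(Z) = c + M(h − f·b/Z). Since P grows with Z, it suffices to test the farthest scene point against the eye distance e. The helper names below are hypothetical; they show how both the image shift h and a projector separation c larger than e push distant content into divergence.

```python
import math


def far_parallax(sensor_offset: float, projector_separation: float, magnification: float,
                 focal: float, baseline: float, z_far: float = math.inf) -> float:
    """Screen parallax P(z_far) = c + M * (h - f * b / z_far) of the farthest scene point."""
    return projector_separation + magnification * (sensor_offset - focal * baseline / z_far)


def diverges(sensor_offset: float, eye_distance: float, projector_separation: float,
             magnification: float, focal: float, baseline: float,
             z_far: float = math.inf) -> bool:
    """True if some point up to z_far would force the observer's eyes to turn outwards."""
    # P(Z) increases with Z, so the farthest point has the largest parallax.
    return far_parallax(sensor_offset, projector_separation, magnification,
                        focal, baseline, z_far) > eye_distance


def max_sensor_offset(eye_distance: float, projector_separation: float, magnification: float,
                      focal: float, baseline: float, z_far: float = math.inf) -> float:
    """Largest image shift h that keeps P(z_far) <= eye_distance."""
    return (eye_distance - projector_separation) / magnification + focal * baseline / z_far
```

For instance, with c = e and h = 0 the parallax of infinitely distant points equals e exactly (parallel eye rays, acceptable), whereas projectors only half a centimetre farther apart than the eyes already make the far background divergent.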

Adjustment of parameters for optimized stereo impression

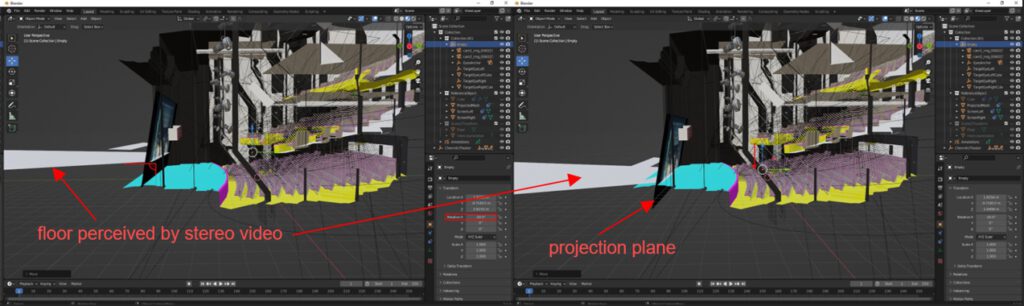

3D model of a theater in combination with a 3D model of the floor reconstructed from the stereo video. Left: wrong stereo parameters cause a mismatch between the CGI floor (blue) and the floor recorded by the stereo cameras (gray plane). Right: smooth transition between the stereo floor and the CGI floor.

In this way, we can adjust the parameters so that the perceived stereo impression matches the 3D model of the theater. The setup on the left of the figure, for instance, causes a gap between the stereo floor and the CGI floor, leading to an odd user perception. This can be fixed by moving and rotating the stereo projectors. Lastly, we need to define the position of the projection planes by moving them within the camera frustum. To avoid inconsistencies when observers move their heads, the planes should be placed as close as possible to the transition between the CGI and the stereo objects (see the right side of the figure). Moreover, relevant video content must not be occluded by the 3D model of the floor or any other element of the event location.
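
One way to quantify the gap between the stereo floor and the CGI floor while moving and rotating the projectors is to fit a line to floor points in a side view and compare it with the CGI floor height. The sketch below is a hypothetical helper, not part of the described workflow. It takes (z, y) pairs (depth, height), for instance the perceived positions of floor reference points, fits y = m·z + k by ordinary least squares, and reports the residual pitch in degrees and the height gap at a chosen reference depth `z_ref`. Driving both values towards zero corresponds to the smooth transition shown on the right of the figure.

```python
import math
from typing import Iterable, Tuple


def floor_misalignment(points: Iterable[Tuple[float, float]], floor_height: float = 0.0,
                       z_ref: float = 0.0) -> Tuple[float, float]:
    """Return (pitch in degrees, height gap at z_ref) of the stereo floor vs. the CGI floor.

    `points` are (z, y) pairs: depth and height of reconstructed floor points.
    """
    pts = list(points)
    n = len(pts)
    if n < 2:
        raise ValueError("need at least two floor points")
    mean_z = sum(z for z, _ in pts) / n
    mean_y = sum(y for _, y in pts) / n
    s_zz = sum((z - mean_z) ** 2 for z, _ in pts)
    if s_zz == 0:
        raise ValueError("floor points must span several depths")
    s_zy = sum((z - mean_z) * (y - mean_y) for z, y in pts)
    slope = s_zy / s_zz                  # m in y = m * z + k (least squares)
    intercept = mean_y - slope * mean_z  # k
    pitch_deg = math.degrees(math.atan(slope))
    gap = slope * z_ref + intercept - floor_height
    return pitch_deg, gap
```

A stereo floor that rises by 5 cm per metre of depth and sits 0.3 m above the CGI floor at 4 m depth would be reported as a pitch of about 2.9° and a gap of 0.3 m at `z_ref=4.0`.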

Integration into a Unity environment

Once the projection screens have been correctly placed relative to the 3D model of the event location, they can be exported from Blender and imported into Unity, which we use for the overall VR application. The rectified stereo videos are encoded in a single movie file by packing the left and right images vertically into one frame. This video is then assigned as a texture to both projection planes. By adjusting the UV map of each projection plane, we ensure that the left image is displayed on the left projection plane and the right image on the right projection plane. For decoding the video itself, we use standard Unity tools.
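
The UV adjustment amounts to compressing each plane's v coordinate into one half of the packed frame. The sketch below is a hypothetical helper, not the authors' export code. It assumes v = 0 at the bottom of the video frame (Unity's texture convention) and, by default, the left-eye image in the upper half; if the packing order differs, `left_on_top` can be flipped.

```python
from typing import List, Sequence, Tuple

UV = Tuple[float, float]


def packed_uvs(plane_uvs: Sequence[UV], eye: str, left_on_top: bool = True) -> List[UV]:
    """Remap a projection plane's UVs (in [0, 1]^2) to one half of a top-bottom packed frame.

    Assumes v = 0 at the bottom of the video frame (as in Unity) and, by default,
    the left-eye image in the upper half.
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    in_top_half = (eye == "left") == left_on_top
    v_offset = 0.5 if in_top_half else 0.0
    # Compress v into half the texture height and shift it into this eye's half.
    return [(u, v_offset + 0.5 * v) for u, v in plane_uvs]
```

Instead of editing the mesh, setting a material's `mainTextureScale` to (1, 0.5) and its `mainTextureOffset` to (0, 0.5) for the upper half, or (0, 0) for the lower half, should have the same effect in Unity.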