Transmission of video recordings in virtual environments

Integrating video recordings of real stage events is an easy way to extend those events into virtual spaces and to make them accessible regardless of location. However, conventional video recordings do not convey depth, which can break immersion in the virtual environment. Stereoscopic videos and light field recordings can mitigate or even prevent this effect.

Stereoscopy and parallax as a prerequisite for spatial presence

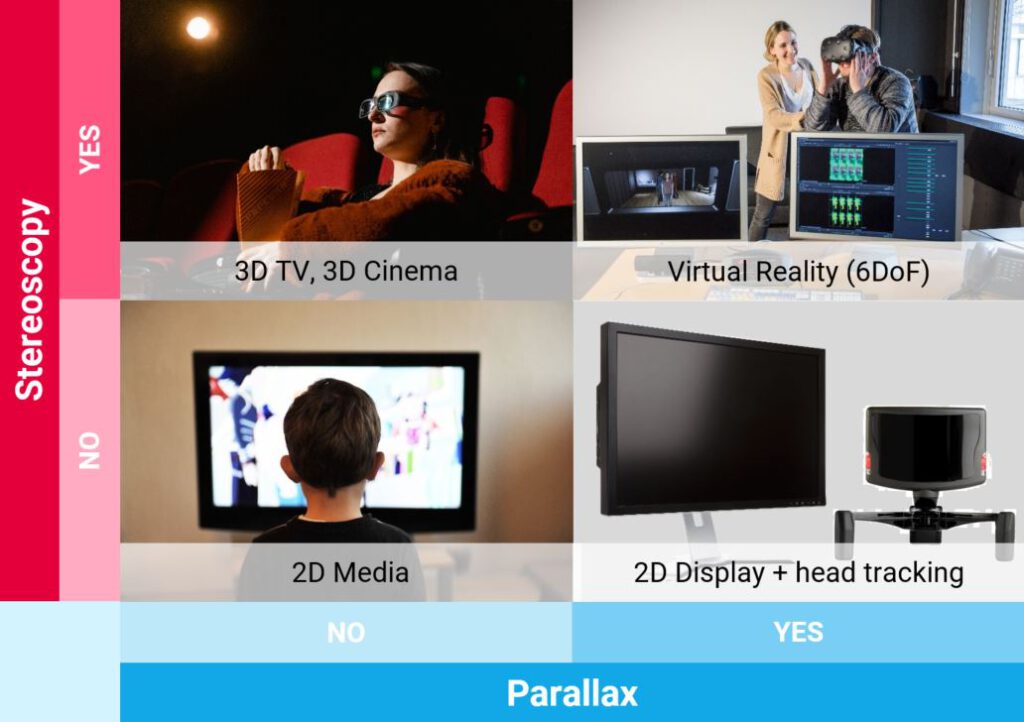

The spatial experience of presence is largely determined by the visual perception of spatiality. We feel present in a virtual environment when we perceive it as a space. This is mainly due to 3D vision, which in turn requires both a stereoscopic effect and a parallax effect. Stereoscopy means presenting two images, one for the left eye and one for the right eye, that are slightly offset in perspective. This creates the perception of depth. The parallax effect occurs when the viewer changes perspective (i.e. moves). As the viewer moves, objects at different distances appear to shift in position relative to each other. Both stereoscopy and the parallax effect give the viewer a sense of the distance between the objects being viewed and thus enable spatial vision.
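The parallax effect described above can be illustrated with a little trigonometry. The following is a minimal sketch, not taken from the project itself: it assumes two objects that both start straight ahead of the viewer and computes how far they appear to drift apart when the viewer steps sideways. The function names and the example distances are hypothetical.

```python
import math


def apparent_direction_deg(step_m: float, distance_m: float) -> float:
    """Angle (in degrees) by which an object that was straight ahead
    appears displaced after the viewer steps sideways by step_m."""
    return math.degrees(math.atan2(step_m, distance_m))


def relative_parallax_deg(step_m: float, near_m: float, far_m: float) -> float:
    """Apparent angular shift between a near and a far object caused by
    the same sideways step of the viewer."""
    return apparent_direction_deg(step_m, near_m) - apparent_direction_deg(step_m, far_m)


if __name__ == "__main__":
    step = 0.10  # assumed sideways movement of the viewer in metres
    for near, far in [(2.0, 20.0), (10.0, 20.0)]:
        shift = relative_parallax_deg(step, near, far)
        print(f"near={near} m, far={far} m: relative shift {shift:.3f} degrees")
```

The closer the near object is to the viewer, the larger the relative shift becomes for the same movement. Without any movement the shift is zero, which is why parallax only arises when the viewer changes position.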

Effect of parallax © Fraunhofer IIS

Classification of 2D- and 3D-based media technologies by their use of stereoscopy and parallax

Virtual reality enables immersive experiences with six degrees of freedom

Current media technologies are predominantly categorized as 2D and therefore do not create a spatial experience of presence. The viewer only looks at the content from the “outside” and is not part of it. Virtual reality glasses display separate content for each eye and track the movement of the viewer, allowing the correct parallax to be displayed. This means that when viewers move in space, i.e. not only turn their heads but also move forwards, backwards or sideways (six degrees of freedom, 6-DoF), they see the environment and its content from different perspectives. While this is easily possible for computer-generated content and environments, it poses major hurdles for the implementation of virtual live events, because real content, such as stage shows, cannot simply be rendered in 3D software.
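To make the six degrees of freedom more concrete, the following minimal sketch models a head pose with three translational and three rotational components and derives a separate viewpoint for each eye. It is an illustration under stated assumptions, not the API of any particular headset: the `Pose6DoF` class, the coordinate convention (right-handed, y up, rotations applied in the order yaw, pitch, roll) and the default eye distance of 0.063 m are all hypothetical choices.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose6DoF:
    # Three translational degrees of freedom (metres).
    x: float
    y: float
    z: float
    # Three rotational degrees of freedom (radians).
    yaw: float    # rotation about the vertical y axis
    pitch: float  # rotation about the head's x (right) axis
    roll: float   # rotation about the head's z axis


def right_vector(pose: Pose6DoF) -> tuple:
    """Unit vector pointing from the left eye towards the right eye."""
    # Start with (1, 0, 0) and apply roll about z.
    x, y, z = math.cos(pose.roll), math.sin(pose.roll), 0.0
    # Apply pitch about x.
    y, z = (y * math.cos(pose.pitch) - z * math.sin(pose.pitch),
            y * math.sin(pose.pitch) + z * math.cos(pose.pitch))
    # Apply yaw about y.
    x, z = (x * math.cos(pose.yaw) + z * math.sin(pose.yaw),
            -x * math.sin(pose.yaw) + z * math.cos(pose.yaw))
    return (x, y, z)


def eye_positions(pose: Pose6DoF, eye_distance_m: float = 0.063):
    """Return (left_eye, right_eye) positions for the given head pose."""
    rx, ry, rz = right_vector(pose)
    half = eye_distance_m / 2.0
    left = (pose.x - half * rx, pose.y - half * ry, pose.z - half * rz)
    right = (pose.x + half * rx, pose.y + half * ry, pose.z + half * rz)
    return left, right


if __name__ == "__main__":
    # Viewer standing 1.7 m tall, having stepped 0.5 m sideways and turned 30 degrees.
    pose = Pose6DoF(0.5, 1.7, 0.0, math.radians(30), 0.0, 0.0)
    print(eye_positions(pose))
```

Rendering the scene once from each of the two returned positions yields the stereoscopic image pair, and updating the pose as the viewer moves yields the parallax.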

Real video recordings as a simple way of transferring stage content into virtual environments

Recording stage content on video is a simple way to bring it into the virtual environment. Other options, such as virtualising content with motion capture technologies, require objects and people to be virtualised as 3D models beforehand and usually necessitate a “studio setup”. Cameras, on the other hand, can simply be set up on site and do not restrict the experience of the real live event. However, video recordings usually do not contain depth information. Integrating such recordings into a virtual environment with 6-DoF will inevitably cause a break in immersion. There is a risk that, from the viewer’s perspective, the content looks like a cinema screen and is therefore not perceived as part of the space.

Composition of the virtual theater experience from the virtual 6-DoF-enabled environment and video-based real recordings of the stage content

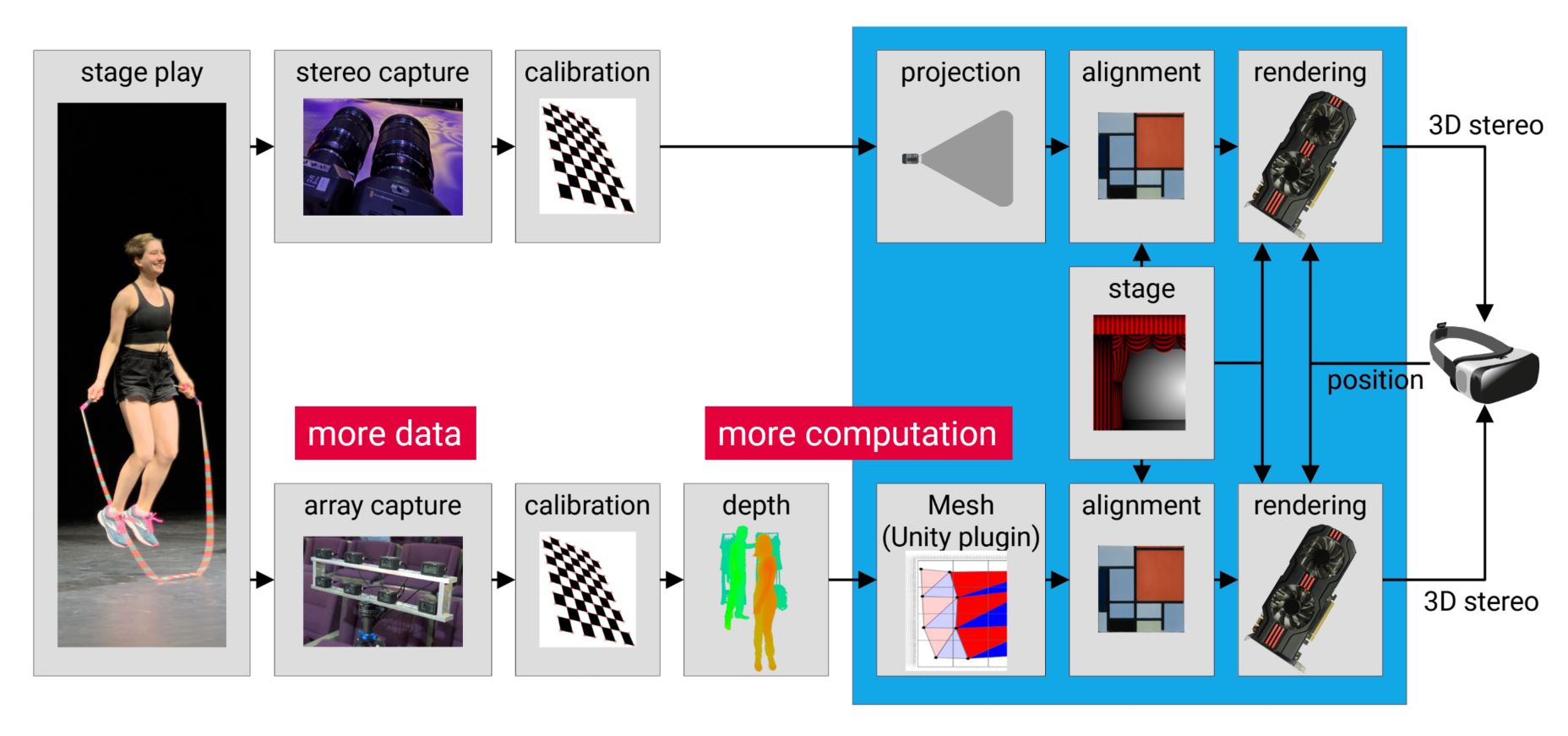

Two ways of transmitting stage content with real video recordings

To create immersive experiences with real video recordings as well, we pursue two approaches. The simpler one is to record stereoscopic footage using two cameras with a slight offset. This produces a stereoscopic effect but no parallax effect. The setup only requires on-site calibration and careful integration into the VR scene, for example by rendering the images onto separate virtual screens for the right and left eye. A camera array, on the other hand, can be used to create a small light field that is 6-DoF capable. Conceptually, this allows for better integration into the virtual world, so that a break in immersion can, in principle, be avoided more reliably. However, this approach requires significantly larger data volumes and more computing effort. Furthermore, achieving artefact-free rendering is a major challenge.
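The difference in data volume between the two approaches can be estimated with simple arithmetic. The sketch below computes the uncompressed data rate of a camera setup. All figures in it are assumptions chosen for illustration only: the text does not state the resolution, frame rate or number of cameras used, so Full HD at 30 frames per second, 3 bytes per pixel and a 3 x 3 array are hypothetical values.

```python
def raw_data_rate_mbit_s(num_cameras: int,
                         width: int = 1920,
                         height: int = 1080,
                         fps: int = 30,
                         bytes_per_pixel: int = 3) -> float:
    """Uncompressed video data rate in Mbit/s for a number of identical cameras."""
    bits_per_second = num_cameras * width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1e6


if __name__ == "__main__":
    stereo = raw_data_rate_mbit_s(num_cameras=2)      # stereoscopic pair
    array = raw_data_rate_mbit_s(num_cameras=3 * 3)   # hypothetical 3 x 3 array
    print(f"stereo pair: {stereo:.0f} Mbit/s")
    print(f"3 x 3 array: {array:.0f} Mbit/s ({array / stereo:.1f} times the stereo rate)")
```

The raw rate scales linearly with the number of cameras. Real systems compress the video, so absolute numbers will be far lower, but the ratio between the two setups indicates why the light field approach is more demanding to transmit.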

Comparison of the process chains for the transmission of stereoscopic video and a sparse light field

Use of stereoscopic recordings

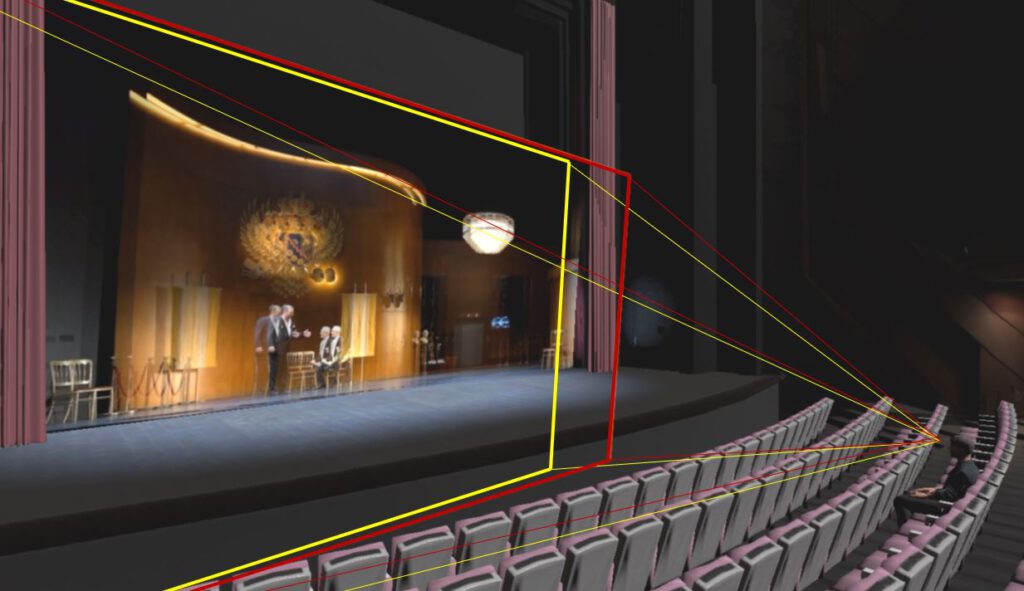

Using a stereoscopic camera setup, video content can be integrated into VR with a good depth effect. The challenge lies in integrating the footage visually so that it does not look like a cinema screen. Even then, the lack of parallax counteracts the impression that the stage content is part of the environment: the representation of the scene always remains the same, even when the viewer moves. These effects are less pronounced, however, when the distance between the content and the viewer is large and the viewer makes only small movements, which makes the setup a good alternative. Its advantages are the good availability of camera setups and the simpler transmission process, which can also be implemented well in real time.

View into the virtual opera house with two planes for the integration of the stereoscopic video material

View into the virtual opera house towards the stage with the sparse light field; the light field creates a correct stereoscopic representation with parallax from the viewer's point of view

Use of a sparse light field

With the help of a camera array, depth information about the scene can be determined in a post-processing step. Ideally, a large number of camera positions would be used for the recording so that all light rays emanating from the scene are captured. This would allow a correct image to be generated for any viewer position. In practice, however, such a large number of cameras is not feasible. Therefore, a sparsely sampled light field is used instead, and the missing camera positions are interpolated using a 3D model. The differences between the images caused by the parallax effect are used to determine depth information, which is then used to transform the video content into a 3D model. By adapting the size of the camera array to the desired radius of movement of the viewer, good results can be achieved even with small arrays. The disadvantages of this approach are the higher processing effort and the significantly larger data volumes compared to a stereo recording. This poses a great challenge, especially for real-time transmission of live events. In addition, the conversion of the real recordings into 3D models is not free of errors, resulting in artefacts that can have a negative effect on the experience.
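How parallax differences between images translate into depth can be sketched with the classic pinhole relation for two rectified, horizontally offset cameras: depth = focal length x baseline / disparity. This is a textbook simplification and not necessarily the method used for the camera array described here. The function names and all parameter values below are hypothetical.

```python
def depth_from_disparity(disparity_px: float,
                         focal_length_px: float,
                         baseline_m: float) -> float:
    """Depth in metres of a point seen by two rectified cameras.

    disparity_px    horizontal pixel shift of the point between both images
    focal_length_px focal length expressed in pixels
    baseline_m      distance between the two camera centres in metres
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


def backproject(u: float, v: float, depth_m: float,
                focal_length_px: float, cx: float, cy: float) -> tuple:
    """Turn a pixel (u, v) with known depth into a 3D point in camera coordinates.

    (cx, cy) is the principal point, assumed here to be the image centre.
    """
    x = (u - cx) * depth_m / focal_length_px
    y = (v - cy) * depth_m / focal_length_px
    return (x, y, depth_m)


if __name__ == "__main__":
    # Assumed values: 1000 px focal length, cameras 10 cm apart, Full HD image.
    depth = depth_from_disparity(disparity_px=20.0, focal_length_px=1000.0, baseline_m=0.1)
    point = backproject(u=1160.0, v=540.0, depth_m=depth,
                        focal_length_px=1000.0, cx=960.0, cy=540.0)
    print(depth, point)
```

Applying this to every pixel with a reliable disparity estimate yields a point cloud from which a 3D model can be built. Because depth is inversely proportional to disparity, small matching errors on distant objects lead to large depth errors, which is one source of the artefacts mentioned above.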